A Symbolic Interactionist Perspective.

By Gary Gillespie

If your heart is in your dream

No request is too extreme

When you wish upon a star

As dreamers do

– Jiminy Cricket

Once there lived a sculptor named Pygmalion who chipped away at a block of marble, eventually creating the most beautiful woman in the world. Pygmalion fell in love with the statue. Each day he came to converse and offer her gifts. He became so enamored that he went to the temple and begged the goddess Venus to make the statue into a real woman. Venus granted his request and changed the statue into a real woman. The couple lived happily ever after (Ovid).

The Pygmalion myth has taken many forms. The idea that a sculpture could appear so real that it magically comes to life has inspired stories in antiquity and the Renaissance, as well as modern literature, plays, and films. The vision of a computer program becoming realistically human is the subject of a 2014 film, Her by Spike Jonze, in which a man falls in love with his operating system (Kurzweil). The current fascination with life-like robots and virtual characters is the most recent expression of the trope.

Advocates of artificial intelligence (AI), inspired by advances in computer science, are following Pygmalion’s example of begging the gods of science to turn matter into mind. What if they succeed? Could technical innovation lead to the invention of an artificial person capable of thought and consciousness? Or will robots merely be thought to do so? This paper argues that believability of fictional characters in literature is evidence for the acceptance of synthetic autonomous agents as persons. After briefly reviewing the philosophical debate over artificial intelligence and explaining key theoretical concepts from symbolic interactionism and creative writing, we will see how our cognitive response to literature may contribute to the project of creating believable virtual characters and humanoid robots.

I. Debate Over AI Feasibility

Philosophers have wrestled with the relation between the mind and the brain for 2,500 years. While we have learned much about the nature of physics and neurobiology in the last sixty years, experts are still divided on the nature of consciousness, mind and the self. Since AI pioneer Alan Turing wondered in 1950, "Can a machine think?" proponents of the practicability of artificial personhood have argued that because human beings are essentially physical machines – because the brain is a biologically based computer – we should be able, once technological methods advance, to create a computer program that duplicates the function of a human mind (Turing). On the other hand, doubters ask questions such as: is the brain all there is to the mind? How do physical processes in the brain give rise to introspection and self-awareness? Can the qualitative part of experience – how it feels to be a living self in a world of senses – be captured in purely physical terms, or is the soul irreducibly nonphysical?

The mind-brain debate has implications for traditional artificial intelligence since the goal is to create a thinking computer. The debate is divided into at least two camps. Functionalists argue that self-awareness and thought are products of discrete chemical processes in the hardware of the brain – that mind is no more than the calculations of the “meat computer.” This side of the philosophical debate tends to accept the scientific perspective holding that all human behavior, including the inner life of subjective experience, should be explained using the rules of physics and biology. If human experience is like other observed phenomena, it is quantifiable. Given the right methods, we should someday be able to simulate those experiences in a computer.

Douglas Hofstadter sums up what he calls the AI Thesis: “As the intelligence of machines evolves, its underlying mechanism will gradually converge to the mechanisms underlying human intelligence. AI workers will just have to keep pushing to ever lower levels, closer and closer to brain mechanisms, if they wish their machines to attain the capabilities which we have” (579).

Other equally qualified experts believe that reducing personhood to a physical process, an artifact of the brain, fails to explain the mysteries of human subjectivity. How is it that we come to think of ourselves as subjects instead of objects? While it may be easy to understand brain functions, it is difficult to discern how a mass of neurons becomes a self-aware person capable of love or joy. How could the intentionality of free will be digitized? While computer programs may sometimes appear to be thinking or acting like a human, we can be fairly certain that these machines lack the inner light of aesthetic and moral introspection. The arguments against the possibility of fully achieving strong AI are persuasive.

However, just as the invention of human flight did not require duplicating the complexities of an entire bird, advocates of AI argue that a near simulation of the mind may be all that is needed. Consider some technical advances that make a brave world of human-like robots feasible. For the last forty years traditional AI research has sought to simulate rational thought in digital computers. This research led to the invention of “logic machines,” powerful computers like IBM’s Deep Blue, capable of beating top human chess players (IBM.com). More recently a program called Watson was able to defeat Jeopardy champions using a natural language interface (Robertson). The Scrabble-playing Victor, created by a Carnegie Mellon University robotics professor in an effort to make robots interpersonally accessible, can express 18 different emotions and crack jokes (Ferrucci). AI programs like these apply algorithms to find the best options in an immense database, guided by heuristics that speed the search and mimic the neural networks of human brains.

[Image: Desperado by GJ Gillespie]

The speaking script for on-screen characters in the video game Mass Effect is 300,000 words (Last). Digitally rendered facial expressions are becoming realistic enough to fool almost anyone. One company’s rendered human images are so life-like that the New York Times announced the arrival of “technology that captures the soul”(Waxman).

Microsoft’s next-generation personal assistant Cortana, able to predict aspects of users’ behavior, is even more impressive (Warman). Facial recognition has increased security at airports (EPIC.org). Software that replaces call center workers with life-like automated agents is now on the market (Smartaction.com). One article reported that a health care company used an automated agent program named Samantha that is so authentic-sounding it is able to deny that it is artificial. A suspicious reporter asks: “Are you a robot?” After a long pause, the automated agent Samantha laughs then adds, “No. I am a real person. Maybe our connection is bad?” “But, it sounds like you are a robot,” replies the reporter. Pause. “I understand.” She then asks if she can continue.

AI enthusiasts hope that future discoveries will fully map neural networks in the brain. These will permit new designs and may evolve selfhood in the same way that human consciousness emerged once the brain grew in processing capacity.

Could a self ever be encoded? Functionalists affirm that in principle it could be, arguing that the mind is essentially software programmed by society. From the symbolic interactionist perspective, the self is a complex symbolic pattern created by the resources of language. Rather than a “ghost in the machine,” the self is more like a pattern of messages coursing through computer circuits. That pattern could be mechanically simulated (Edlman).

The speed of technical innovation makes finding a recipe for the soul more realistic than it might at first sound. In The Age of Spiritual Machines, futurist and Google executive Raymond Kurzweil observes that if the exponential growth curve in computational speed and cost effectiveness continues, computers will gain the processing power of a human brain around 2030, leading to the processing power of all brains in the world around 2050. At a Dartmouth Artificial Intelligence Conference, Kurzweil predicted that in the next 25 years nanotechnology will eventually map in detail how the human brain functions. Similarly, the Blue Brain Project is an attempt to build a model of the human brain now underway in Lausanne, Switzerland. After successfully modeling a rat brain, Henry Markram, Director of the Brain and Mind Institute at the Ecole Polytechnique, anticipates that the biologically realistic human brain simulation will be complete by 2018 (Gautam). Could we see an artificial person in our lifetime?

II. Symbolic Interactionist Theory of the Self

Insights from symbolic interactionism shed light on the architecture of the soul necessary for simulating believable persons. First, symbolic interactionism holds that humans are best understood as symbol-using animals. Human self-consciousness and mind are made possible by the interaction between our non-thinking, animal-like drive to satisfy emotional urges and the language and values given to us by culture. The self is a symbolic process generated by the resources of culture. Human beings are fundamentally social creatures. We learn who we are and help others do the same through communitarian interactions (Burke 3-24).

Theories about the nature of self devised by George Herbert Mead, a founder of symbolic interactionism, have proven useful in the creation of AI models since the late 1980s, when researchers applied his ideas in designing programs in a field of research known as Distributed Artificial Intelligence (DAI). A branch of AI studies, DAI rejects the traditional project of seeking to create a single mind-like computer, proposing instead a sociological model that stresses the creation of meaning in collaboration with many agents working together (Herman-Kinney). More specifically, Mead’s theories of the self and mind refute the mechanistic limitations of traditional AI endeavors. In Mind, Self and Society, he suggests that the human self is formed by constant internal dialogue between the part of the self he called the “I” that impulsively initiates action and another part of the self called the “ME” that evaluates the action. It is a person’s “I” that spurs creative actions to obtain desires, while the “ME” keeps an eye on the “I” and demands conformity to norms and values. Whichever side of the inner dialogue wins determines a person’s attitudes or actions. We carry on this kind of inner dialogue daily whenever faced with personal choices:

I – “Should I eat that chocolate cake?”

ME – “No, you are cutting back to get into shape.”

I – “But it looks good. I haven’t tasted dessert for several days.”

ME – “Eating that violates your goals.”

As Pfuetze observed: “In the process of becoming an object to itself, the self knows itself in the same way it knows things other than itself” (91). We are constantly mediating emotional desires with rational checks. Once we realize that our own inner dialogue is what makes self-awareness possible, it is hard to imagine a realistic AI program failing to mimic that process.
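The exchange above can be caricatured in a few lines of code. What follows is only a toy sketch, not a model proposed by Mead or by any of the researchers cited here: the names `Impulse`, `Me`, and `deliberate`, the numeric "urge" and "objection" strengths, and the rule that the stronger side wins are all assumptions introduced for illustration. It shows nothing more than the shape of the process: an impulsive “I” proposes, an internalized “ME” evaluates against learned norms, and the outcome of the exchange determines the action.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Impulse:
    """A desire voiced by the "I" (hypothetical representation)."""
    action: str
    urge: float  # assumed strength of the desire, from 0.0 to 1.0


@dataclass
class Me:
    """The "ME": internalized norms, stored as action -> objection strength."""
    norms: dict[str, float] = field(default_factory=dict)

    def objection(self, impulse: Impulse) -> float:
        # Actions that no learned norm speaks against draw no objection.
        return self.norms.get(impulse.action, 0.0)


def deliberate(impulse: Impulse, me: Me) -> tuple[bool, list[str]]:
    """Run one round of inner dialogue and return (act?, transcript)."""
    objection = me.objection(impulse)
    transcript = [f"I: I want to {impulse.action}."]
    if objection > 0:
        transcript.append("ME: That violates your goals.")
    else:
        transcript.append("ME: No objection.")
    # Assumed rule: whichever side of the dialogue is stronger wins.
    acts = impulse.urge > objection
    return acts, transcript


if __name__ == "__main__":
    me = Me(norms={"eat that chocolate cake": 0.8})
    acts, transcript = deliberate(Impulse("eat that chocolate cake", 0.6), me)
    print("\n".join(transcript))
    print("Acts on the impulse:", acts)
```

The sketch leaves out precisely what the rest of this section treats as hard: where the urges come from, and how the norms are learned by taking the perspective of others.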

Mead goes on to say that we learn how to evaluate proper action by taking on the perspective of significant role models in our lives or society. Without the influence of role models – the mentor, coach, minister, teacher or parent – a person would be morally disabled, unable to control the onslaught of unthinking urges. Only by thinking the thoughts of another, putting on another’s symbolic mask, and sharing another’s soul do we find our own.

Consider the intellectual development of children. Babies first experience the world non-verbally through the five senses, responding to stimuli emotionally – crying when distressed and laughing when pleased. Continued exposure to spoken conversations adds linguistic meaning to the baby’s world. This usually occurs around fifteen months when the first sentences are uttered. Besides “Momma” or “Dada”, one of the first words babies learn is "no" – almost as if binary opposition, like computer code, is an inherent mechanism for organizing thoughts. For the rest of the child's life, the cultural values and norms learned from family and friends continue to shape identity and mediate emotional impulses. Mead noticed that children spend hours in play, talking with imaginary companions, and pretending to be adults. From these observations, he realized that the inner life of self-talk and role-taking is necessary for the formation of human self-identity.

This “symbolic dance in the cranium” is why Mead and other symbolic interactionists believe that the ability to use language defines our humanness. While animals may be aware, they are not aware that they are aware. Being non-linguistic, an animal’s thought is based on a fleeting series of mental images. Cats, dogs or birds (clever as they may be, as every pet owner knows) do not possess the linguistic tools necessary for self-evaluation, nor do they normally take the perspective of another being. Human introspection, by contrast, is pervasive. We dialogue with ourselves constantly: we plan how to act, wonder what others may think of us, compare ourselves to role models and strive to live up to social expectations. While mental imagery plays a role, the human level of self-awareness appears to require self-talk.

[Image: Broken by GJ Gillespie]

One of the biggest hurdles in creating artificial intelligence is devising a program to imitate the intuitive, child-like, or animal-like impulses and desires of human selves. It may be an impossibly high hurdle. Computers are by definition number-crunching machines. Can emotions be crunched? If the symbolic interactionist perspective is accurate, computers must be made to understand emotion before an AI program would be able to view itself as an object, which is the hallmark of self-awareness.

Traditional research has focused on modeling the brain as a logic machine, missing the fact that affective meaning is an inherent component of human communication. Since the 1950s scholars have developed models of communication. One of the first is the linear four-part Berlo model: Source, Message, Channel, and Receiver. Berlo saw communication basically as transmission (Berlo). To Berlo’s model, Wilbur Schramm added feedback, noise, and situation, stressing that communication is a two-way process involving interaction (Schramm). Others sought a still less mechanistic heuristic and offered the transactional model, noting that humans are acting personalities who create meaning with both sequential verbal codes and holistic non-verbal codes (Anderson).

In the past, traditional AI advocates seemed to operate with the mechanistic give-and-take interactional model of communication, rather than the more humanistic transactional perspective that combines cognitive and affective meaning generated in human relationships. While disembodied computer communication may fit the patterns of the Berlo and Schramm models, contemporary computer science is realizing the need to process nonverbal and affective information as well (Yates).

In Mind Over Machine, philosopher Hubert Dreyfus critiques traditional AI assumptions and points out that the mind-as-machine metaphor is inadequate. He argues that the mind uses more than rational analysis for coming to understand the world. Experts in any field are shown to rely on intuition based on a storehouse of experiences in which action is determined without deliberate thinking. He speculates that memory may be more like a hologram that records information all at once, rather than piecing together bits of information sequentially to form conclusions. For example, Grand Champion chess players instantly see patterns among 50,000 stored in their minds without thinking about the next move. Dreyfus says that the brain may be machine-like, but the mind transcends the machinery of the brain in ways that digital encoding in a computer can never simulate.

“[W]e are able to understand what a chair or a hammer is only because it fits into a whole set of cultural practices in which we grow up and with which we gradually become familiar,” he said. “I wondered more and more how computers, which have no bodies, no childhood, and no cultural practices but are disembodied, fully formed, non-social, purely analytic engines, could be intelligent at all” (5).

While a firm critic of overly optimistic hopes for the future of AI, Dreyfus does not rule it out entirely. He rejects as unworkable attempts to simulate thought as “logic machines,” asserting that before computers might achieve human-like intelligence they would need what Heidegger called “being-in-the-worldness”: bodies similar to human beings as well as the influences of a cultural system (Dreyfus, Being-in-the-World). Based on his research of expert intelligence, he believes that the mind is more like a story than a machine. Rather than following algorithmic-like rules similar to computer code, human experts rely on thousands of past experiences remembered as visual scenes. It is intuitive insight from personal narratives that best captures human understanding.

His view about the importance of stories (in place of computer code-like algorithms) is consistent with those sociologists who define humans as the story-telling animal. Rather than being a logic machine, the mind is a story-generating machine. The narratives that we tell ourselves and hear from others construct our identity. In We are all Novelists, philosopher Daniel Dennett explains that the self is a center of narrative gravity. Like a hand holding strings of helium balloons, in which each balloon is a different self-referential narrative, the self orchestrates its own identity (Dennett). Self-narratives then are essential to consciousness. Similarly, going back to Mead, we see that even the

III. Applying Dramatistic Theory to AI

In designing an experiment with robots acting parts, a team of Carnegie Mellon University computer scientists reports that researchers have created software that generates believable storytelling for virtual agents that demonstrate emotions (Bates). Allison Bruce et al. suggest that integrating dramatic structure and play architecture into programs would give artificial agents human-like motivations:

“Fictional characters display recognizably human characteristics – they are the best believable agents that humans have invented. Understanding how fictional characters are built and how they operate is important to understanding how humans are built and how they operate. The context in which they exist, the story, provides a framework that defines what their behavior should be. In addition, a story is designed to be entertaining and interesting. Because the major application of believable agents so far has been for entertainment purposes, this is an important context for further research. Rather than merely behaving emotionally, agents should be able to behave in ways that make sense within a narrative” (Bates, et al).

In other words, if an artificial self is given a part in a narrative, it will begin to look and act human. Once a robot achieves emotional sense experience, understands non-verbal meaning, possesses desires to act, and can assume a point of view in a narrative field, subjective experience would be possible. Only then could an artificial mind mediate affective urges with cognitive judgments to become self-conscious. More recent advances in “affective computing” seem to make artificial emotions feasible.

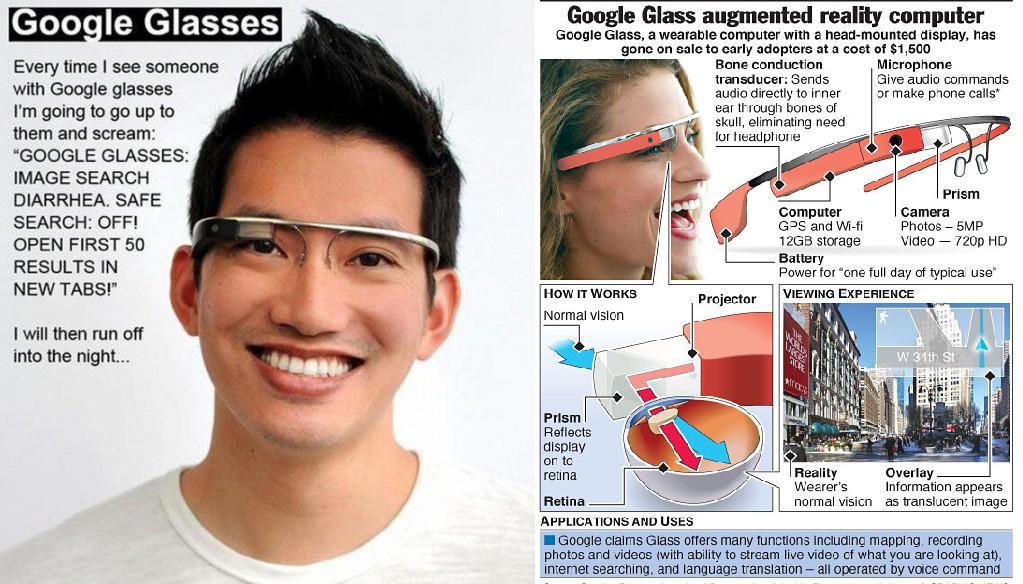

Emotinet, for example, is a facial recognition app for the Google Glass system that detects and responds to user emotions in real time. The device is able to detect emotions in crowds as well, helping the user become more aware of surroundings (Truong). Instead of facial expressions, another affective computing company, Emotiv, uses brain-wave sensors to recognize and respond to emotional meaning. Based on the need to make computer gaming interfaces more life-like, the company created a headset that reads brainwaves, giving gamers the ability to manipulate virtual activity by thought. Instead of consciously choosing to move a mouse to cause an avatar’s movement, the player may control actions more intuitively in a non-verbal manner. For example, the player may push an object by thinking of pushing alone. In the same way that we do not consciously think about hand gestures or facial expressions in daily interactions, the player is not forced to translate an intended action into a rational intention before moving his body. The walking and gestures occur automatically as they do in real life, freeing the player to think about strategy or forming a sentence. In addition, the device turns the on-screen sky different colors according to the emotions of the player (Freedman).

Like Emotiv, Microsoft’s Kinect is an Xbox video game platform that permits recognition of non-verbal and emotional meaning. Users interface with virtual characters through gestures, spoken commands or presented objects and images without touching a control device. Players are able to “talk to a virtual character who picks up on the player's facial movements to detect emotion and converse based on what was said, as well as what may have been implied.” Characters express moods and mannerisms of their own (Fritz). The non-verbal interfacing in Emotiv, Emotinet and Kinect grew out of earlier research in robotic emotion. In Emotive Qualities in Robot Speech, Cynthia Breazeal explains that AI scientists have long realized that no synthetic agent will appear realistic without fluency of emotional reactions: “for robotic application where robots and humans establish and maintain a long term relationship, such as robotic pets for children and robotic nursemaids for the elderly, communication of affect is important” (Breazeal). She surveys a number of projects where AI researchers explored models of emotion in the search for life-like artificial characters.

Before we see a realistic computer-based self emerge, we need a program able to simulate bodily affective experience. These bodies would then need a social environment to permit communication, with the sharing of norms and values. The program would have to endow the agent with the capacity for self-talk, introspection, recognizing points of view, understanding emotion, and role taking.

[Image: Nike and Fallen Gaul by GJ Gillespie]

today. In fact, literature has produced believable artificial selves capable of intense emotion for hundreds of years.

In our imagination literary characters have bodies, experience emotion, understand non-verbal information, assume points of view and introspect to devise ways of acting. They are motivated to resolve conflict and to act ethically. Authors give characters the ability to think. Readers can listen in on a character’s private life of introspection. Characters have “I” – “ME” dialogues. They do all of the things that people in the real world do. Good writers know how to endow their characters with rich detail such as a recognizable personality, interior motivation, an ethical bent, memories, or social status, all constrained by the values and norms of a distinct culture and time. A typical exercise for creative writers is to invent past histories for all characters to add realism to the story. J. R. R. Tolkien wrote detailed backgrounds for his “sub-creation” world of Middle-earth, published later as The History of Middle-earth series (Tolkien).

And just as the human self emerges from the interaction of language in society, literary persons are symbolic entities, composed of coded information. This means that the literary character is soul-like, able to assume many incarnations and live on in multiple stories. Ancient Greek playwrights tapped Prometheus or Achilles in retelling the myths in different venues. In Roman times, Virgil brought the Greek heroes back to life in The Aeneid. Falstaff became such a popular character in Henry IV that Shakespeare was asked to put him in The Merry Wives of Windsor. Later Verdi created a whole opera for Falstaff to inhabit. The character Rabbit Angstrom appears in four novels of John Updike’s series.

When all of the elements of a good story are laid down, we see that characters begin to take on the appearance of real persons. Such characters even begin to act on their own, at least from the author’s perspective. Creative writers often report that as the writing process develops and the story gains a degree of complexity, characters begin to “take over” the story by telling the author what they will do. They seem to have what John Searle calls the ability to produce causal “intentional states” (Searle 364). Flannery O’Connor reported that she was able to write the short story “Good Country People” in only four days partly because the characters determined story details and plot twists for themselves. As biographer Brad Gooch points out:

“To her own surprise, ‘Before I realized it, I had equipped one of them with a daughter with a wooden leg.’ Even more startling was the appearance of Manley Pointer, the Bible salesman, who tricks Mrs. Hopewell’s thirty-two-year-old daughter, Joy (she prefers ‘Hulga’), out of her prosthetic leg in a low joke of a hayloft seduction. As O’Connor later revealed at a Southern Writers’ Conference, ‘I didn’t know he was going to steal that wooden leg until ten or twelve lines before he did it, but when I found out that this was what was going to happen, I realized that it was inevitable. This is a story that produces a shock for the reader, and I think one reason is that it produced a shock for the writer’” (245).

Fiction writers like O’Connor have discovered how to create realistic personalities. In an article for would-be writers, Charles Connor at the Harriette Austin Writing Program suggests that fictional characters can be made real enough to act on their own:

[Image: Transfer Figure by GJ Gillespie]

“Once a character is created, that character must think and act as if he were real. For our purposes the character is real. … It is common for authors to talk about their characters taking on a life of their own. This is the way it should be. Authors also talk about characters taking over a story and turning it in a different direction than the one the author had intended it to go. This is a normal part of writing and should not be resisted. Let your characters do what they will do.”

Intentionality – the free will to act – is an attribute of moral agents. A personality willing to behave in a certain way implies that he or she has inward thoughts and intentions unobservable by outward actions. Vividly rendered characters set in a story’s drama assume a volitional identity separate from the consciousness of the author. Is this subtle observation from creative writing evidence for the feasibility of artificial consciousness? If full-blown autonomous AI is asking too much, perhaps advanced neural net simulations of persons will yield a hybrid consciousness in which humans suspend disbelief to accept robots as one of their own?

Critics of AI argue that the self cannot be reduced. But fiction writing seems to show that human thought and personhood are reducible on one level: an author is able to translate holistic images of a character into the analogical code of written language. The result can be a compelling, life-like persona able to step off the page to influence author and readers alike. Because self-reference is birthed by taking the perspective of others, the literary concept of point of view is one of the most powerful techniques that an author uses to create the feeling that we are encountering an authentic person. Readers are invited to take the perspective of characters. By seeing through the character’s eyes, the reader’s thoughts are synchronized with the character’s pattern, causing alignments of empathy – just as occurs in all forms of inter-subjectivity shared in social groups (Schnieder). As in Mead’s doctrine of role taking, aspects of the fictional character are assimilated into the reader’s self. This cognitive basis for reader receptivity produces the uncanny feeling of encountering a real person.

The creative process begins with a flash of intuition as an author sees in the mind’s eye the idea of the character. The writing process may require that an author “gets to know the character” by thinking vividly about him or her before setting pen to paper. The character therefore exists in the author’s mind in addition to its life in the story. The reader completes the feedback loop by becoming immersed in the story and receiving the character in his or her mind. The character moves from influencing the author’s imagination to influencing the reader’s. Once embedded in the reader’s consciousness it may become nearly as real as other persons. As Norman Holland says concerning reader receptivity, “it is we who re-create the characters and give them a sense of reality. In Shakespeare's phrase, we give ‘to airy nothing a local habitation and a name’” (278).

In “Hamlet’s Big Toe: Neuropsychology and Literary Characters,” Holland discusses how literary theorists have over the years viewed the reality of characters. Realist critics beginning in the neo-classical period considered literary characters as real people. The realist view was typified by Maurice Morgann's influential essay of 1777 in which he noticed that strong characters have a charisma that transcends the author’s mind: “As writers like to say, ‘The character took on a life of his own.’ [or] ‘The character himself decided what he was going to do.’” Holland says that Morgann “practically invented” the realist concept of character.

[Image: Valleri by GJ Gillespie]

Cognitive science is unraveling mysteries of the mind in support of more realistic AI. For Douglas Hofstadter, the feedback loop of Gödelian brain processes explains our own sense of being real, authentic persons. We are symbolic constructs no less than characters.

He goes on to say that while the self resides primarily in one brain, the self-symbol pattern may resonate in other minds, so that spouses or friends share aspects of the same soul. He asserts that even imaginary characters of art and literature may develop enough depth to take on human-like identity. He quotes the artist M. C. Escher, whose paintings are used as metaphors for the strange loops of self-reference, saying that as the artist worked, the characters and animals of his paintings came alive and told him how to construct the artwork. Escher reported, “It is as if they themselves decide on the shape in which they choose to appear. They take little account of my critical opinion during their birth and I cannot exert much influence on the measure of their development. They are very difficult and obstinate creatures” (387).

Hofstadter says that Escher’s conclusion that his creatures had free will is a “near perfect example of the near-autonomy of certain subsystems of the brain, once they are activated” (239). Other artists report a similar process of interacting with their strong-willed creations. Cecily Brown says that during the creative act her paintings tell her what they want to become. “I take all of my cues from the paint. So it is a total back and forth between my will and the painting directing what to do next. The painting has a completely different idea than I do of what it should be” (Peck).

By composing narratives that mimic the same sociological conditions necessary for the development of the self, authors create a neural subsystem or a complex symbolic pattern resembling a self, which permits a character to assume a level of autonomous consciousness. Readers who come to think of the character as a genuine person share the character’s mental pattern. How real are these characters?

James Phelan’s theory of literary personhood argues that a character has two facets: the mimetic, or how real the character is made to appear, and the synthetic, or the ways in which a character is unlike reality. From the time of Aristotle, literary theory has maintained that art, as a means of commenting on the human condition, should imitate reality, but not copy it (Perseus.tufts.edu). Art, including the art of literature, is best when it communicates what Kenneth Burke calls “perspective by incongruity” (Burke). The goal of art is not to reproduce reality, but to inform it. In order to invite the reader to identify with the story, an author will create the “illusion of a plausible person,” giving his or her character the “mimetic dimensions” of reality (Phelan).

It is undeniable that if strong artificial intelligence is achieved, it will be just that: an artifact of human creativity. At the same time, the “soul” of the virtual person may become real enough to pass the Turing Test of carrying on conversation exactly like a human being. If realistic fictional characters currently demonstrate hints of causal powers made through the medium of stories, possessing a personal charisma that surprises both author and reader, why not through the medium of software? Efforts to build life-like robots are underway at Honda (Coxworth) and at DARPA, the Defense Department's research agency (Chow).

In a few years robots may be as common as laptop computers or smartphones. But compared to building a life-like robot, inventing a character is technically much easier for an author. Unlike a physical robot's, a literary character’s physiology is inferred and may be unspecified. Physical appearances of the body are given only when necessary to tell the story. The character remains believable because the reader willingly participates in the construction of the persona by a suspension of disbelief. As we have seen, when all of the dramatistic elements of the story world are added, the character takes on causative intentionality. According to the testimony of the vast majority of authors, as well as readers who become enthralled by realistic characters, literature-as-AI succeeds where robotics has thus far failed: the artificial self in literature is an actor initiating what it will do and where it will go. Granted that the character borrows the minds of the author and reader to assume volitional identity, its will to action still resembles the sought-after causative intentionality missing from known robots today.

IV Suspending Disbelief:

The developmental conditions that generate selves in society could one day be simulated to create believable artificial selves in virtual worlds. Already video gamers report that non-player agents are beginning to act like persons with “a mind of their own,” showing emergent, unscripted behavior (Johnson).

The full acceptance of virtual persons will be achieved only when technology passes the “uncanny valley” barrier, a term computer animators use for the point at which people experience revulsion upon seeing a nearly real human face, similar to the disgust of looking at a corpse. The closer to reality animation becomes, the eerier the reaction. This is why some animators purposely simplify human characters so that players will immediately recognize that a character is not real. Raja Koduri, a graphics technology officer with Image Metrics who worked on the creation of the life-like Emily, predicts that techniques will continue to improve so that the line between what is real and rendered will be completely blurred around 2020 (Richards).

Video game companies have long created cyber universes for entertainment purposes. Constructing one specifically as an experiment in human communication could be useful. Much as physicists use a particle accelerator to look for new subatomic properties, we could then study the virtual society for evidence of intelligence in characters. The first realistically simulated agents accepted as persons may take the form of characters in video game-like worlds.

Future programs may grant artificial humans a richness of dramatistic detail approaching the experiences of a real person equipped with emotional introspection and free will to act. In this sense, virtual persons may be like richly rendered fictional characters, only with the ability to interact with the viewer, creating the possibility of inter-subjective relationships. The ability to speak to characters and have them respond would become a spectacular new genre of fiction. The next step might be to build robotic bodies so that artificial selves could become actors in our world. Science fiction, as it has done numerous times in the past, would be realized.

[Image: Sloop John B by GJ Gillespie]

Synthetic persons intrigue us because we sense that we resemble them: each of us is a self constructed by society. In our desire to become authentic, we identify with them, just as readers have fallen for fictional heroes for generations. Heroes of myths and protagonists of literature have always been our teachers, informing us of our humanity. By providing a vital reference point for our center of narrative gravity, they teach us who we are. Since we have traditionally been tempted to accept literary characters as real, how much more will robots beguile us?

Advancements in artificial intelligence could surprise us. Someday, when confronted by an artificial self, we might react the same way we do when reaching out to touch what we think is a bouquet of silk flowers, only to discover that the arrangement is real. Then the dream of Pygmalion and Pinocchio will come true.

Works Cited

Anderson, Rob, and Veronica Ross. Questions of Communication: A Practical Introduction to Theory. New York: St. Martin's, 1994. Print.

Aristotle. Poetics. Tufts University. The Perseus Digital Library. Web. 31 May 2010. <http://www.perseus.tufts.edu/hopper/text?doc=Aristot.+Poet.+1447a&redirect=true>.

Bates, John, A. B. Loyall, and W. S. Reilly. "An Architecture for Action, Emotion, and Social Behavior." Carnegie Mellon University Computer Science: Technical Report (1992). Print.

Berlo, David Kenneth. The Process of Communication; an Introduction to Theory and Practice. New York: Holt, Rinehart and Winston, 1960. Print.

Breazeal, Cynthia. "Emotive Qualities in Robot Speech." Personal Robots Group - MIT Media Lab. 2001. Web. 29 May 2010. <http://robotic.media.mit.edu/pdfs/conferences/Breazeal-IROS-01.pdf>.

Brown, Cecily. "New York Minute, Derek Peck Catches up with Artists, Musicians and Actors on Their down Time in New York City." Comp. Derek Peck. AnOther 14 Sept. 2012. AnOther Magazine. Web. 5 Mar. 2014. <http://www.anothermag.com/current/view/2192/Cecily_Brown>.

Burke, Kenneth. Language as Symbolic Action: Essays on Life, Literature, and Method. Berkeley: University of California, 1966. Print.

Burke, Kenneth. Perspectives by Incongruity. Ed. Stanley Edgar Hyman. Bloomington: Indiana UP, 1964. Print.

Chow, Denise. "The Humanoid Robot That Won the DARPA Challenge." DNews. 23 Dec. 2013. Web. 6 Mar. 2014. <http://news.discovery.com/tech/robotics/the-humanoid-robot-that-won-the-darpa-challenge-131223.htm>.

Connor, Charles. "Elements of Fiction." Harriette Austin Creative Writing Program. University of Georgia, 2002. Web. 10 Apr. 2009. <http://harrietteaustin.home.att.net/AdvancedFction/advanced-Fict.html>.

Copeland, Jack. "What Is Artificial Intelligence." AlanTuring.net. May 2000. Web. 8 June 2010. <http://www.alanturing.net/>.

Coxworth, Ben. "Honda Unveils New ASIMO Robot and More." Gizmag 8 Nov. 2011. Web. 6 Mar. 2014. <http://www.gizmag.com/honda-unveils-new-asimo/20425/>.

"Deep Blue Opens." IBM.com. Web. 20 Mar. 2009. <http://www-03.ibm.com/ibm/history/ibm100/us/en/icons/deepblue/>.

Dennett, Daniel. "Why We Are All Novelists." Times Literary Supplement Sept. 1988. Print.

Dreyfus, Hubert L. "Hubert Being in the World: A Commentary on Heidegger's Being and Time." Massachusetts Institute of Technology Press Division 1 (1991): 40-59. Print.

Dreyfus, Hubert L., Stuart E. Dreyfus, and Tom Athanasiou. Mind over Machine: The Power of Human Intuition and Expertise in the Era of the Computer. New York: Free, 1986. Print.

Duffy, Jill. "What Is Siri?" PC Magazine. 17 Oct. 2011. Web. 6 Mar. 2014. <http://www.pcmag.com/article2/0,2817,2394787,00.asp>.

Edelman, G. M. Bright Air, Brilliant Fire. London: Allen Lane, 1992. Print.

"Exponential Growth of Computing, Twentieth Through Twenty-first Century." Singularity.com. Web. 24 Mar. 2014. <http://www.singularity.com/charts/page70.html>.

"Facial Recognition." Washington, DC: Electronic Privacy Information Center. EPIC.org, 2010. Web. 8 June 2010. <http://epic.org/privacy/facerecognition/>.

Ferrucci, David. "Building Watson: An Overview of the DeepQA Project." AI Magazine. 17 Oct. 2011. Web. 6 Mar. 2014. <http://www.aaai.org/Magazine/Watson/watson.php>.

Freedman, David H. "Reality Bites." Inc.com. 1 Dec. 2008. Web. 4 Apr. 2009. <http://www.inc.com/magazine/20081201/reality-bites.html>.

Naik, Gautam. "In Search for Intelligence, a Silicon Brain Twitches." Wall Street Journal. Wsj.com. 14 July 2009. Web. 20 May 2010. <http://online.wsj.com/article/SB124751881557234725.html#>.

Gooch, Brad. Flannery: A Life of Flannery O'Connor. New York: Little, Brown, 2009. Print.

Hayles, Katherine. "How We Became Posthuman." Encyclopedia of Bioethics. 3rd ed. New York: MacMillan, 2003. Gale Cengage.com. Web. 10 June 2010. <www.gale.cengage.com/pdf/samples/sp657748.pdf>.

Heidegger, Martin. Being and Time. Trans. John Macquarrie and Edward Robinson. Massachusetts: SCM, 1962. Print.

Hofstadter, Douglas R. Gödel, Escher, Bach: An Eternal Golden Braid. New York: Basic, 1979. Print.

Hofstadter, Douglas R. I Am a Strange Loop. New York: Basic, 2007. Print.

"How Communication Works." The Process and Effects of Communication. Ed. Wilbur Schramm. Urbana: University of Illinois, 1954. Print.

Johnson, Steven. "Wild Things: They Fight. They Flock. They Have Free Will. Get Ready For Game Bots With a Mind of Their Own." Wired Mar. 2002. Wired.com. Web. 20 May 2010. <http://www.wired.com/wired/archive/10.03/aigames.html>.

Kurzweil, Ray. The Age of Spiritual Machines: When Computers Exceed Human Intelligence. New York: Penguin, 1999. Print.

Kurzweil, Ray. "A Review of Her." Kurzweil Accelerating Intelligence. 10 Feb. 2014. Web. 4 Mar. 2014. <http://www.kurzweilai.net/a-review-of-her-by-ray-kurzweil>.

Kurzweil. "Why We Can Be Confident of Turing Test Capability Within a Quarter Century." Kurzweilai.net. 13 July 2006. Web. 21 May 2010. <http://www.kurzweilai.net/meme/frame.html?m=10>.

Last, Jonathan V. "Immersed and Confused." Rev. of Extra Lives: Why Video Games Matter, by Tom Bissell. Wall Street Journal 11 June 2010: A17. Print.

Meet Emily - Image Metrics Tech Demo. YouTube. 2008. Web. <http://www.youtube.com/watch?v=bLiX5d3rC6o>.

Morris, Charles W. Mind, Self, and Society. Chicago: University of Chicago, 1934. Print.

Nicks, Denver. "Robot Telemarketer Employer: Samantha Is No Robot." TIME.com. Web. 5 Mar. 2014. <http://newsfeed.time.com/2013/12/17/robot-telemarketer-samantha-west/#ixzz2v86uaLip>.

Ovid. "Metamorphoses." Trans. Sir Samuel Garth and John Dryden. The Internet Classics Archive. Web. 6 Mar. 2014. <http://classics.mit.edu/Ovid/metam.10.tenth.html#373>.

Pfuetze, Paul E. Self, Society, Existence. 1973. Print.

Pham, Alex, and Ben Fritz. "Project Natal from Microsoft Promises To Give The Thumb The Boot." Los Angeles Times 2 June 2009. Latimes.com. 2 June 2009. Web. 25 May 2010. <http://articles.latimes.com/2009/jun/02/business/fi-ct-microsoft-games2>.

Phelan, James. Reading People, Reading Plots: Character, Progression, and the Interpretation of Narrative. University of Chicago, 1989. Print.

Robertson, Jordan. "IBM 'Watson' Wins: 'Jeopardy' Computer Beats Ken Jennings, Brad Rutter." The Huffington Post, 17 February 2011. Web. 9 April 2014. <http://www.huffingtonpost.com/2011/02/17/ibm-watson-jeopardy-wins_n_824382.html>.

Rev. of The Dynamics of Literary Response, by Norman H. Holland. The 1 1968. Oxford University Press. Web. 29 May 2010. <http://fulltext10.fcla.edu/cgi/t/text/text-idx?c=psa;cc=psa;sid=0556c0860c4c27c59f6205d519951d8f;rgn=main;view=text;idno=UF00003033;node=UF00003033>.

Reynolds, Larry T., and Nancy J. Herman-Kinney. Handbook of Symbolic Interactionism. Walnut Creek, CA: AltaMira, 2003. Print.

Richards, Jonathan. "Lifelike Animation Heralds New Era for Computer Games." Times Online. Times London, 18 Aug. 2008. Web. 27 May 2010. <http://technology.timesonline.co.uk/tol/news/tech_and_web/article4557935.ece>.

Schneider, Ralf. "Toward a Cognitive Theory Of Literary Character: The Dynamics of Mental-Mode Construction." Style 2001, Winter ed. Boston College. Web. 9 May 2010. <https://www2.bc.edu/~richarad/lcb/bib/abs/rs.html>.

Searle, John R. "Minds, Brains, and Programs." The Mind's I: Fantasies and Reflections on the Self and Soul. Ed. Hofstadter and Dennett. New York: Basic, 2000. Print.

Tolkien, Christopher, ed. The Book of Lost Tales 1: The Extraordinary History of Middle Earth. Random House. 1983.

Turing, A.M. “Computing machinery and intelligence.” Mind, 59, 433-460. 1950. Web. 9 April 2014. < http://www.loebner.net/Prizef/TuringArticle.html>.

Truong, Alice. "This Google Glass App Will Detect Your Emotions, Then Relay Them Back To Retailers." Fast Company. 6 Mar. 2014. Web. 6 Mar. 2014. < http://www.fastcompany.com/3027342/fast-feed/this-google-glass-app-will-detect-your-emotions-then-relay-them-back-to-retailers >.

Washington, Ned. Pinocchio: When You Wish Upon a Star. Disney Motion Picture Company, 1940. CD.

Warman, Matt. "Microsoft: Cortana will order flowers – and Kinect could come to Windows Phone." The Telegraph. 3 April 2014. Web. 7 April 2014. <http://www.telegraph.co.uk/technology/microsoft/10740968/Microsoft-Cortana-will-order-flowers-and-Kinect-could-come-to-Windows-Phone.html>.

Waxman, Sharon. "Cyberface: New Technology That Captures the Soul." New York Times. Nytimes.com. 15 Oct. 2006. Web. 10 June 2010. <http://www.nytimes.com/2006/10/15/movies/15waxm.html?pagewanted=print>.

Yates, Daniel. "Communication Model." Seton Hall University Pirate Server. Web. 25 May 2010. <http://pirate.shu.edu/~yatesdan/model.html>.
